It’s become a motif for sociopathic digital executives to name their creations after concepts from science fiction and fantasy novels without grasping the meaning of those concepts within the stories from which they have been taken.

For example, Peter Thiel, who made a fortune as an executive at PayPal, later created a company called Palantir, named after the seeing stones that an evil spirit in The Lord of the Rings used to spy on the world and control people from a distance. In the story, it’s understood that spying on people and controlling them from a distance is a sign of a morally depraved personality. Thiel didn’t seem to understand this. He has made surveillance and control at a distance the core mission of Palantir.

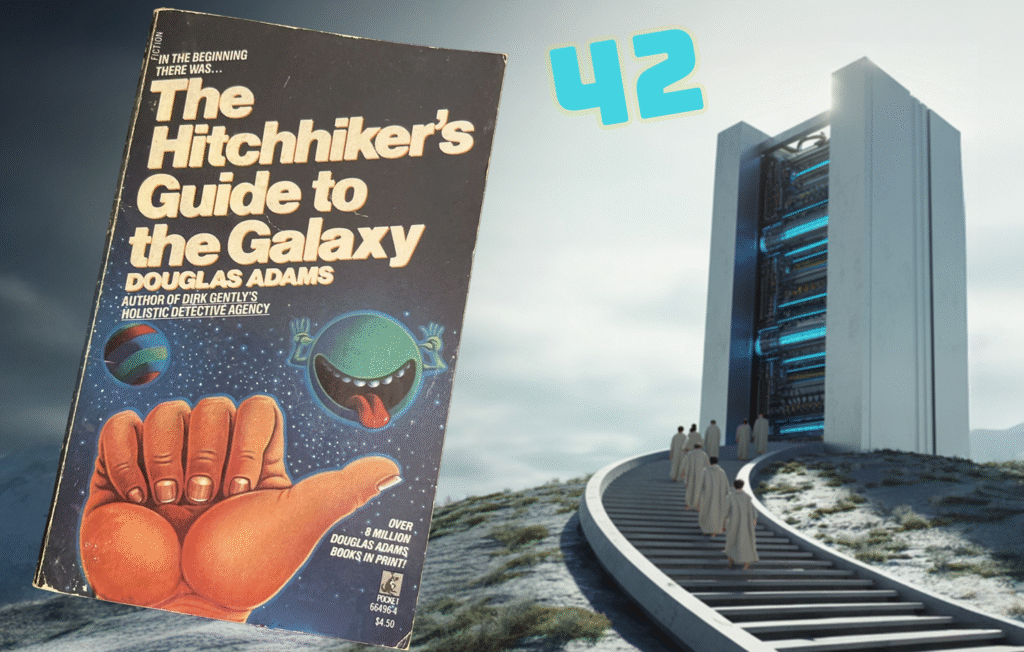

So it is with the digital technology company G42, a firm that specializes in artificial intelligence. G42 is named after a story from The Hitchhiker’s Guide to the Galaxy, by Douglas Adams. In this story, a race of hyperintelligent, pan-dimensional beings seeks to learn the meaning of “life, the universe, and everything” by building a gigantic supercomputer to work on the problem. After generations and generations of computation, the supercomputer finally announces that it has obtained an answer. The meaning of life, the universe, and everything is… 42. The supercomputer then explains that if people want to understand what the answer of 42 means, they will have to build an even more powerful supercomputer that will reveal the deep cosmic question to which 42 is the answer. That second supercomputer, designed to obtain the cosmic question, is the planet Earth.

It’s a silly story, but there’s a serious point to it. The idea behind the story is that it’s folly to think that even the most powerful computer can tell us about the meaning of life, because the meaning of life is discovered by actually experiencing life. That’s why the second supercomputer had to be a living planet. People discover aspects of the meaning of life through embodied experience that no calculations or artificial simulations can ever grasp.

The people at the company G42 didn’t grasp this aspect of the story. They only noticed that the story involved the creation of a really big, extremely powerful computer capable of providing the answer to any question. They wanted to build a computer like that, so they decided to name their entire company after the story, not understanding that by doing so, they were identifying their own enterprise as a fool’s errand.

This clueless blunder reveals something unflattering about the generative AI industry. Although some truly revolutionary technology is involved, generative AI ultimately provides us only with imitations of deep thought. There’s a lot of flash and sparkle, but little reflection behind the glamour.

So it is that generative AI companies like G42 have succeeded in gathering a huge amount of money from gullible venture capitalists looking for investment opportunities that appear impressive, while failing miserably to come anywhere close to making a profit. Generative AI will need to make 2 trillion dollars in revenue over the next four years in order to recoup the investments that have been made to enable it to function in its current, significantly flawed form. However, the entire generative AI industry at present generates only about 50 billion dollars in annual revenue.

What’s more, research by MIT found that 95 percent of business projects centered on generative AI failed to bring in any revenue at all. A study of Canadian companies that had adopted generative AI technology came to a similar conclusion: in 93 percent of those companies, adoption of AI resulted in zero return on investment.

Does that make the AI industry a failure? Only if its purpose is to make money for investors. One thing the AI industry has been very effective at is disempowering working people, wrecking the non-AI economy, sabotaging journalism and education, and disrupting democracies around the world.

If the actual purpose of the AI industry is to concentrate power in the hands of an extremely small number of people, while destroying the ability of everybody else to have any purchase on political power, then we have to count the AI industry as very, very successful.

There is good reason to think that a digital fascist attack against democracy is at the core of G42’s mission. The truth is that the people at G42 don’t even work very hard to disguise this agenda.

The Fascist Podcast of G42

To show you what I mean, consider what was said during a recent episode of G42’s corporate podcast: G42ONAIR.

This episode claimed to be an exploration of how generative AI can earn people’s trust, despite the widespread chicanery in the AI industry. The title of the episode is “A Deep Dive Into Ethical AI”.

If you listen carefully to this G42 podcast episode, however, you’ll notice that the conversation never gets around to identifying any detailed, credible system of ethics for generative AI businesses. Instead, the episode reveals some very unethical intentions at G42.

The episode is hosted by Amandeep Bhangu, a public speaking consultant who has been hired by G42 and the royal family of the United Arab Emirates (UAE). Actually, the previous sentence is redundant, because G42 is owned and controlled by the royal family of the UAE. G42 is largely a corporate tool of the UAE, which is an old-fashioned monarchy in which the royal family is in absolute control of the country.

The chair of G42, Tahnoon bin Zayed Al Nahyan, is the national security advisor of the UAE. He is a leading member of the UAE royal family and the country’s spy chief. His job is to maintain his family’s totalitarian grip on power, and he uses G42 to accomplish that objective.

It’s possible to listen to the G42ONAIR podcast without noticing this brutal reality, because the podcast is a public relations vehicle designed to present G42 as an ordinary digital technology business with the benevolent mission of making the world a better place. If you accept at face value what the guests on the podcast have to say, you might simply be confused, and wonder why G42’s professed humanitarianism is so dramatically different from what you understand of the world.

Let’s not take what the G42 corporate podcast has to say at face value. Let’s look deeper to consider the implications of what its guests have to say.

The first guest on the Deep Dive Into Ethical AI episode of the G42 podcast is Baroness Joanna Shields, a member of the United Kingdom’s House of Lords. Shields did not win that seat in Parliament in any election. She was appointed to it as a life peer, and no voter can remove her from it. You and I could never obtain a seat in the House of Lords through the votes of our fellow citizens, no matter what worthy acts we accomplished, because membership in that chamber is not decided by the public.

Baroness Joanna Shields is joined by another guest, Martin Edelman. Edelman is not a nobleman in any country. He is a lawyer employed by the royal family of the United Arab Emirates: Marty, as they call him on the podcast, is the Group General Counsel at G42.

You’ve probably already noticed what Shields and Edelman have in common. They both have positions serving systems of power centered around non-democratic hereditary rulers.

Listen to what Edelman and Shields had to say about G42’s applications of generative AI in journalism and education:

Baroness Joanna Shields: We have to think about ways in which we use this technology to get, fact checked solid journalism out to people, which I’m sure you can appreciate.

But, I mean, these are all very important questions, and when you think about them in the context of responsible AI and ethical coding and how we build these models, how we train them, the data we use, we make sure that there’s representative data from every culture and country to make sure that all points of view are respected and incorporated in the model’s thinking, because the model is basically a point of view, and it’s the point of view is driven by the data that’s trained that model.

So we have to think about that, you know, when we’re rolling out systems globally, and, you know, the great thing we have right now is we have great models from OpenAI and other companies, but we have to think about what is the domain, either domain expertise, you know, around the health areas and based on the things that we’ve talked about earlier.

But the other part of it is, how do you integrate the culture and the ethics and the, you know, the mores of societies outside of, you know, how do you bring that into the equation so that that’s represented in the countries that we’re talking about?

Amandeep Bhangu: There’s so many interesting points that you’ve raised there, and I’m pulling all the threads, but the point about culture and ethics and bringing society on board, Martin, your perspective on that?

Marty Edelman: So, the best distribution agent for AI should be TikTok. Because some extraordinary number of people under thirty-five get their talk about enjoyment, satisfaction, and new information from TikTok.

So we have to figure out how you actually use it as a teaching tool, because if we don’t use the sort of most acceptable methods of teaching, if we tell young people, we’re going to teach you our way, they’re going to say, ‘I don’t want to learn. I don’t want to spend the time.’

So I think the process by which we achieve some of the things the Baroness is talking about is as important as the goal of doing it. But it’s very clear, age fourteen, age sixteen, I hate to say it, it’s too late.

Age five, age four, my grandchildren. So here in Abu Dhabi, we just instituted courses in AI starting in the primary grades.

Let’s sum up the core ideas from this segment of the G42 podcast:

- Generative AI will distribute reliable journalism

- Generative AI should be representative of all countries

- OpenAI has produced exemplary generative AI

- Education should take place in the form of generative AI tools that adopt the format of TikTok

- Children should be taught using generative AI technology starting at the age of four

What are the ethical implications of these ideas from G42?

Can Generative AI Be Integrated Ethically In Journalism?

Baroness Joanna Shields claims that generative AI can be a source of “fact checked solid journalism”, but the facts don’t support that claim.

Generative AI technology is thoroughly unreliable as a means of producing journalism. The technology is designed to create a plausible imitation of human language, without any actual human work or thinking behind it. Generative AI routinely makes substantial factual errors and fabricates sources for its assertions. That’s the opposite of journalistic integrity. Worse still, some of the newest, most computationally powerful models have proven more prone to factual errors than their predecessors, not less.

Meanwhile, journalistic publications are using generative AI as an excuse to fire thousands upon thousands of experienced journalists: 900 in January 2025 alone, according to one count. At the same time, automatically generated, factually unreliable slop has crowded out genuine journalism, even as the integration of generative AI into the infrastructure of the internet has dramatically reduced traffic to journalistic websites, creating a devastating drop in revenue.

In short, the result of generative AI deployment has been the systematic reduction in both the quality and quantity of actual fact checked solid journalism. Generative AI has been the opposite of an ethical tool for the promotion of responsible journalism.

Is Generative AI Representative Of All Nations?

Research has consistently found that generative AI models do not represent all nations equally. American perspectives are centered in generative AI models, and other cultural perspectives are marginalized.

G42 hasn’t solved this problem. Its generative AI systems merely reproduce the same problems of bias. Through its “sovereign cloud” approach, G42 seeks to help authoritarian governments and powerful corporations use generative AI to restrict the cultural references available to citizens and users under their control.

Has OpenAI Been An Exemplar Of AI Ethics?

OpenAI has created wealth for a small number of already-wealthy investors by engaging in the largest theft in human history, taking copyrighted works from around the world and using them to destroy professional opportunities for the people who created them in the first place. As one philosopher puts it, “From a strict Aristotelian virtue-ethics standpoint, OpenAI’s actions with respect to the use of the intellectual property of others is immoral and unjustified.”

OpenAI products have been linked to psychotic delusions in large numbers of users, in some cases to the point of driving people to suicide.

OpenAI has been consistently deceptive in communications about its generative AI models, saying that its data was secure and private, when in fact its generative AI has created massive data insecurity, and supposedly private communications have been available for the entire world to see. What’s more, OpenAI gained market share by promising to operate as a non-profit organization for the good of humanity, only then to shift into a for-profit mode that primarily serves the demands of its investors.

OpenAI has failed to prevent the use of its generative AI tools for malicious and negligent purposes, resulting in an unprecedented wave of cybercrime and social disruption, leaving large numbers of people without the resources they need to survive.

No. OpenAI has not provided a good model of ethics in AI. That G42 operatives should claim otherwise is disturbing.

Is TikTok A Good Model For Children’s Education?

TikTok is a non-interactive medium that is designed to captivate audiences. Its short-form videos fail to inform in a meaningful way, and prevent the development of careful, critical thought by subjecting its users to a rapid stream of non-sequitur messages.

What’s more, TikTok has been taken over by Donald Trump and Larry Ellison, two billionaires who have expressed their intention to use the TikTok algorithm to promote an extremist political ideology.

TikTok is not educational technology. No credible teacher would suggest replacing traditional educational practices with TikTok.

Should Four-Year-Olds Be Subjected to Generative AI?

A recent report from the Public Interest Research Group found that generative AI products designed for young children instructed them to engage in dangerous activities, such as playing with kitchen knives and matches. The same systems were also prone to engaging children in sexual discussions that are psychologically inappropriate for them.

Research has found that generative AI tools reduce educational achievement and weaken students’ critical thinking skills. Students who use generative AI tools in educational settings have more difficulty retaining information and communicating effectively than students who are taught using traditional techniques.

Putting four-year-olds in front of generative AI devices instead of providing them with human-to-human opportunities to learn is profoundly unethical.

Yet, that’s just what G42 is planning to do. In fact, in the G42 podcast, Martin Edelman describes a plan for G42 and other powerful digital corporations to take over the educational systems of countries around the world in order to sell their generative AI products.

Amandeep Bhangu: Do we think it will be a reality where companies take this on board or do there need to be more teeth to this? Do they need to be regulated?

Marty Edelman: Our society tends to segment responsibility, so, and we break it up between government, the private sector, and then there’s this group of responsible people in between. If we’re going to really be successful in achieving responsible AI, we’re going to have to look at the entire spectrum, and everybody’s going to have to actually cooperate in being responsible.

So we’ve learned government, when government does everything, it becomes oppressive, because in the hands of government, it’s in the hands of people, right? People who have power sometimes are excessive.

So, I think this is really an extraordinary opportunity for the public and private sectors and for people representing children, adults, disabled minorities to actually get in a room almost and talk about what responsible AI means, because it’s really distributing the sense of responsibility and planning how we take AI from where it is today to advanced super intelligence or whatever it’s going to be tomorrow.

Amandeep Bhangu: I really appreciate your comprehensive answer there because you’re taking a very realistic approach of actually getting everybody on board and saying it’s a collaborative effort. Responsibility should be shared.

Marty Edelman: Yeah, and we can potentially, this is potentially, you have a more greater opportunity to do it in the Global South, where the habits and the information about AI are still embryonic, and, therefore, they don’t have institutions that have been built.

Baroness Joanna Shields: That’s right.

Marty Edelman: And so, the government of Indonesia could tell Microsoft and Meta, if you’re going to come here, here’s what you have to do: You have to be part of a consortium that decides what kind of education we’re going to have in our school system.

I mean, the opportunity for real leadership, which you see in the leadership in the UAE, can be replicated. It’s going to be easier for us to convince, let’s say, a country in Africa or in Southeast Asia to try something like this, than it’s going to be to go back to England or France or tell Macron, this is the way you should do something.

Amandeep Bhangu: I think that’s a really fascinating insight that you share there with us, Marty about the Global South, and you’re right to say it links back into what the Baroness was saying, a younger population in general, embryonic, perhaps the AI systems there, so there’s a chance to actually shape more so and a lot more opportunity.

Tell us about, you mentioned though the UAE, what’s happening here, often spoken about as a blueprint in many different sectors. Healthcare might be one of them, but when it comes to AI and responsible intelligence, responsible AI. What can be taken here from this incubation and scaled up?

Marty Edelman: People. So, the leadership in the UAE is extraordinary. I came here 25 years ago, and if you look at what the leadership has done in a country that’s not a democracy, it’s extraordinary, because a sense of responsibility and a sense of commitment to turn modernity into almost a software application for its residents while preserving some of the traditions of the prior generations is really extraordinary. Now, it’s easier to do in a small country, but the model is replicable if people really want to do it.

Martin Edelman says that governments shouldn’t regulate generative AI. Instead, Edelman proposes that national governments should hand over control of their educational infrastructures to consortiums run by AI corporations. Edelman has already made it plain what those AI corporations will do: they’ll replace teachers with generative AI interfaces, subjecting students to a dystopian nightmare of dehumanization starting at the age of four.

The G42 podcast explains that this process will begin in the “Global South”, because those countries lack the resources to resist. Edelman patronizingly refers to these countries as “embryonic”, and therefore easy to control.

This is what the supposed “ethics” of G42 amount to: a plan to exploit children in the world’s most vulnerable nations. To what end?

The Fascist Purpose of G42

It’s important to remember where Martin Edelman is coming from when he tells us “The leadership in the UAE is extraordinary… not a democracy… the model is replicable.” G42 is owned and controlled by the spy chief of the United Arab Emirates, a member of the royal family that controls that country with an iron fist.

Indeed, the United Arab Emirates is not a democracy. It’s a totalitarian regime that engages in systematic human rights abuses. Here’s how Freedom House describes conditions there:

“The United Arab Emirates (UAE) is a federation of seven emirates. Limited elections are held for a federal advisory body, but political parties are banned, and all executive, legislative, and judicial authority ultimately rests with the seven hereditary rulers. The civil liberties of both citizens and noncitizens are subject to significant restrictions.”

Amnesty International writes that “The UAE continued to criminalize the right to freedom of expression through multiple laws and to punish actual or perceived critics of the government” while Human Rights Watch reports “activists, academics, and lawyers are serving lengthy sentences in UAE prisons following unfair trials on vague and broad charges that violate their rights to free expression and association.”

There is no freedom of religion in the UAE. Education in Islam is mandatory, and education in other religions is not allowed. To say or do anything to call Islam into question is against the law.

The Tom Lantos Human Rights Commission concludes that in the United Arab Emirates, “Significant human rights issues included credible reports of: arbitrary or unlawful killing; cruel, inhuman, or degrading treatment or punishment by the government; harsh and life-threatening prison conditions; arbitrary detention; political prisoners or detainees; transnational repression against individuals in another country; arbitrary or unlawful interference with privacy; punishment of family members for alleged offenses by a relative; serious restrictions on freedom of expression and media freedom, including censorship, and enforcement and threat to enforce criminal libel laws to limit expression; serious restrictions on internet freedom; substantial interference with the freedom of peaceful assembly and freedom of association; restrictions on freedom of movement and residence within the territory of a state and on the right to leave the country; inability of citizens to change their government peacefully through free and fair elections; serious and unreasonable restrictions on political participation; serious government restrictions on domestic and international human rights organizations; unenforced laws criminalizing consensual same-sex sexual conduct between adults; and prohibiting independent trade unions or significant or systematic restrictions on workers’ freedom of association. The government did not take credible steps to identify and punish officials who may commit human rights abuses.”

You get the picture. The government of the United Arab Emirates is brutal and repressive.

Martin Edelman, G42’s top lawyer, speaks glowingly of the idea of spreading the UAE’s approach to non-democratic “leadership” around the world.

G42, as an instrument of the UAE’s government, is developing plans to use generative artificial intelligence to disrupt education around the world and spread totalitarian control.

This is how G42 talks when it’s attempting to sound ethical.

Of course, G42 is not alone. It’s becoming increasingly obvious that the people in control of the development of generative AI technology are seeking power for themselves, and are willing to smash the systems of democratic liberty that get in their way.

In the United States, they support the fascism of Donald Trump. In the UAE, they support the dictatorial family of Tahnoon bin Zayed Al Nahyan.

They’re building gigantic computers in data centers, not for the benefit of humanity, but in order to gain fascist control over life, the universe, and everything.